The ATxAI 2024 conference, part of the ATxSummit, was held on 31 May 2024 at Capella Singapore. This global flagship conference on AI governance brought together respected visionaries, industry leaders, practitioners, experts and policymakers in a series of discussions on building trustworthy AI, exploring both opportunities and challenges.

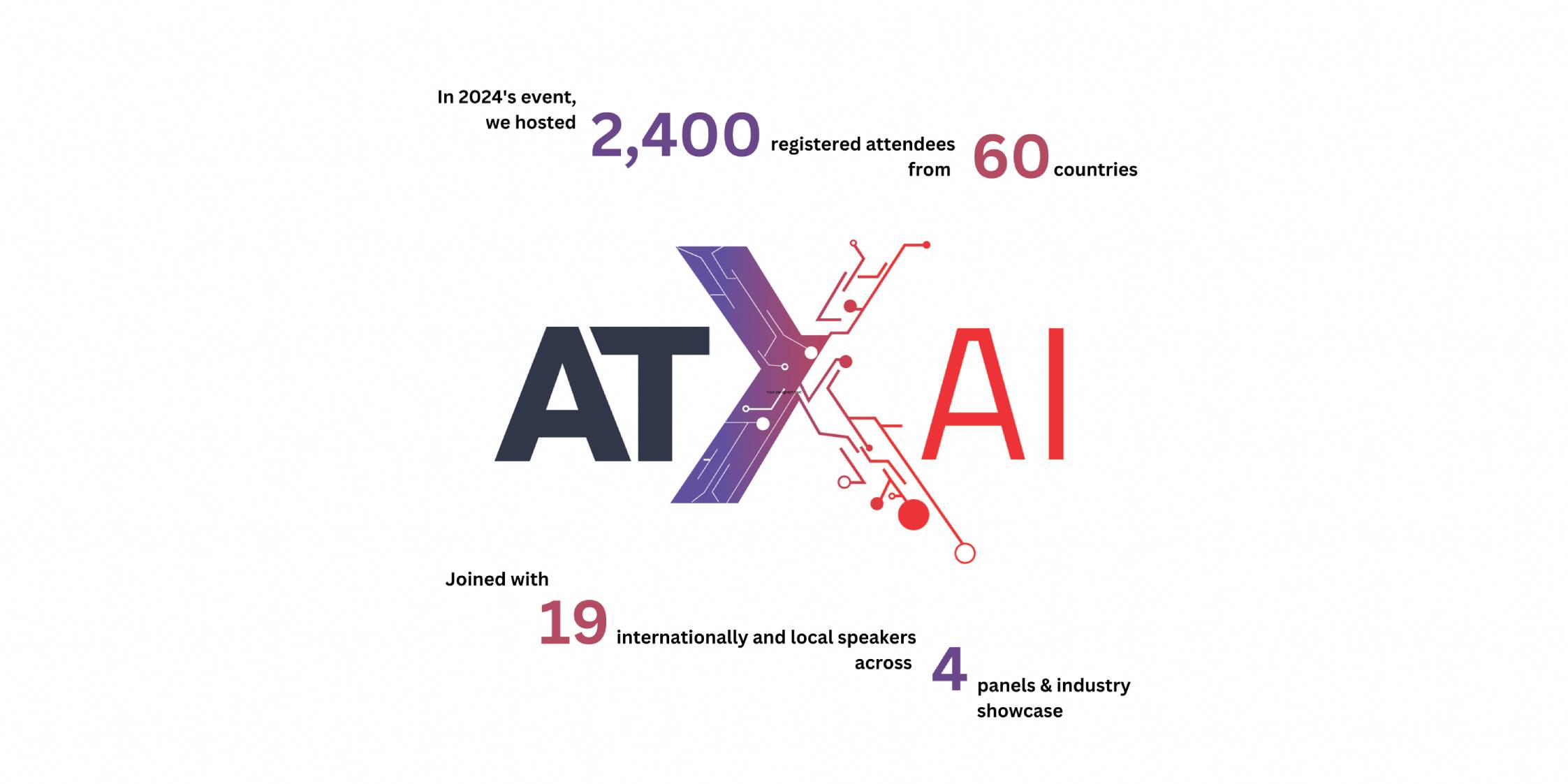

The conference was packed, with many attendees eager to hear insights from 17 international and local speakers across three panels.

The first panel session, titled “That’s Not Taylor Swift – Governance in an Era of Deepfakes”, discussed the transformative potential of generative AI and its accompanying risks as the line between fabrication and reality blurs. It is now harder than ever for consumers to distinguish between AI-generated and original content. Stefan Schnorr noted that deepfakes posed risks to democratic processes by eroding trust. With deepfakes growing ever more sophisticated, Yi Zeng cautioned that “seeing was not believing” and highlighted that the general public did not necessarily understand how deepfakes could affect human decision-making.

The panellists agreed that while technical solutions such as provenance technologies and digital signatures were important, no single intervention would be a silver bullet, and they stressed the importance of pairing technical solutions with resilient policy frameworks. Natasha Crampton emphasised the need for global cooperation, citing the AI Elections Accord signed at the Munich Security Conference as a model for collective action against deepfakes. Echoing this sentiment, Hiroaki Kitano called for a shift from international dialogue to concrete action.

A highlight of ATxAI was the demonstration of Project Moonshot, an initiative that extends the AI Verify Foundation’s commitment to responsible AI into the realm of generative AI. Thomas Tay of the Infocomm Media Development Authority (IMDA) showed how Project Moonshot would help to address both the significant opportunities and the associated risks of generative AI. Its key benefit lies in helping companies make informed decisions when selecting the large language model best suited to their business goals. It also helps ensure that applications built on these models are robust and safe, so that businesses can harness the power of generative AI while maintaining high standards of safety and reliability.

The second panel session, titled “Responsible AI – Evaluation Tools, Standards and Technology”, tackled the critical issue of AI safety in light of rapid technological advancements. The discussion looked at how organisations could envision AI safety, likening it to a “tech stack” in which various stakeholders collaborate to create a comprehensive safety framework. This approach brings together policymakers, standards development organisations (SDOs), regulators and third-party testers. Wael William Diab highlighted how efficiently the ISO ecosystem addresses AI safety, noting that it builds on existing standards from related fields such as information security. Peter Mattson shared insights into MLCommons’ approach to AI safety, noting that AI safety is difficult to define because AI systems can behave like a black box. Safety benchmarks therefore serve as a standard of measurement for how safe an AI system is, which is important for developing AI technical standards.

Wrapping up ATxAI 2024 was the final panel, titled “Full Speed Ahead – Accelerating the Science Behind Responsible AI”, which explored how organisations, societies and countries could come together to address AI safety issues. Elizabeth Kelly said that AI, as a global technology, demands global solutions, and that any meaningful work on AI safety requires collaboration among AI safety institutes around the world. Lin Yonghua and Akiko Murakami described how China and Japan had tackled localisation by developing their own large language models and making additional efforts to ensure that these models were aware of local cultural sensitivities.

The importance of culture in AI development emerged as a key theme. Petri Myllymäki cited the United Nations’ interim report “Governing AI for Humanity”, noting that it overlooked the cultural dimension. Culture extends beyond language to encompass the diverse values and norms of different regions, and this diversity renders a one-size-fits-all approach ineffective.

Open-source models and benchmarks were highlighted as another important factor in ensuring AI safety. Lam Kwok Yan emphasised that open-source models provide a crucial starting point for organisations that lack the means to develop and train their own AI models.

The last panel closed with the panellists taking questions from the floor. One attendee asked how voluntary benchmarks and restrictions could be enforced and how effective they were in deterring bad actors. Elizabeth Kelly responded by citing the experience of the US AI Safety Institute: although its guidance was not legally binding, industry had shown substantial compliance, driven by a collaborative spirit.

IMDA’s Assistant Chief Executive Ong Chen Hui, who moderated the panel, concluded with a balanced perspective. She stressed that AI governance extends beyond legislation, and that voluntary commitments are just as important and effective in driving innovation in AI safety.
