IBM, the multinational technology giant headquartered in Armonk, New York, is renowned for many of its innovative technology solutions, including AI. One of IBM’s key offerings is Industry Accelerators, a comprehensive set of tools that enable users to analyse data, build models, and display results to tackle common business issues.

IBM is dedicated to developing and promoting trustworthy AI – AI that is transparent, explainable, fair, and secure. The company believes that ethical AI practices are essential for building public trust in AI systems. IBM also advocates for the adoption of industry-wide standards and best practices for AI development and deployment. As part of its ongoing commitment to ethical AI, joining the AI Verify international pilot was a natural step for IBM.

IBM piloted the AI Verify testing framework on credit risk:

IBM’s positive feedback on the testing process and potential areas for enhancement:

Based on IBM’s experience, some areas of the Minimum Viable Product (MVP) could benefit from crowd-sourced international efforts, as the science behind such testing is still nascent. In particular, for technical testing of robustness, explainability, and fairness:

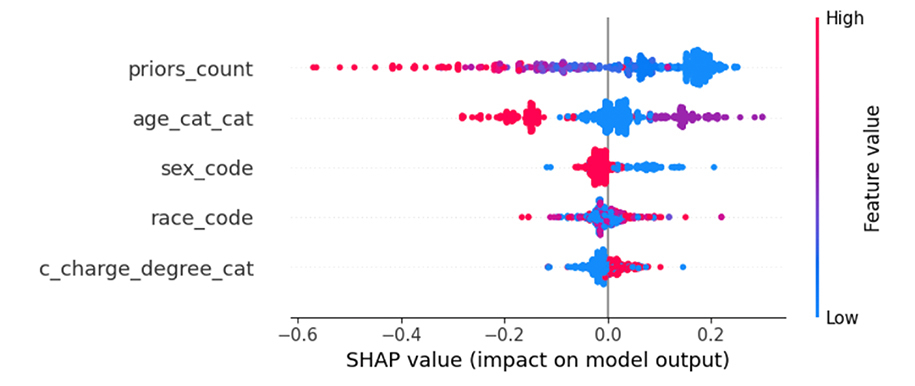

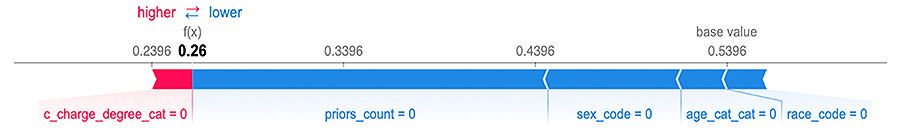

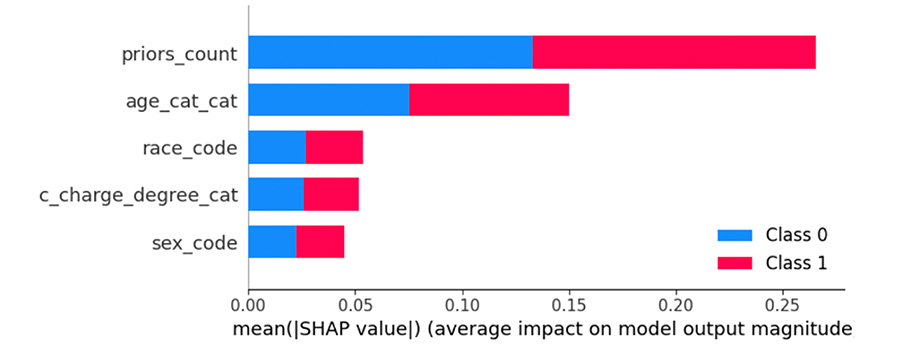

Examples of a dot plot, a force plot, and a bar plot in the AI Verify MVP.

IBM’s commitment to trustworthy AI reflects its belief that AI has the potential to transform society in positive ways, but only if it is developed and deployed in a responsible and ethical manner.

AI Verify is a good start towards the implementation of AI governance. IBM plans to support this framework in its AI governance platform and continuous monitoring framework.
