With the rise in global AI adoption, particularly in high-risk sectors and use cases, building trust is critical to ensure that both consumers and organisations benefit from adopting AI.

As a global, independent third-party AI test lab that verifies the performance of AI algorithms, Resaro focuses on accelerating responsible, safe, and robust AI adoption for enterprises, through evaluation of AI systems against emerging regulatory requirements. For enterprises, this means informed trust as they purchase third-party AI solutions. For AI vendors, it means getting a stamp of approval. Resaro’s mission is to enable an AI market worthy of trust.

As a founding and premier member of the AI Verify Foundation, Resaro is also committed to enhancing open-source tools that are globally interoperable as an enabler of trust in AI. Resaro sees AI Verify as a good way to document and assess AI systems across their lifecycle, examining key governance principles like explainability, robustness, and fairness.

Resaro tested AI Verify on:

AI model: Computer Vision Classification Model

Use case: Resaro used AI Verify as part of its service offering for evaluating third-party AI models. One of its clients wanted to assess the performance of third-party image classification models in the Singapore population context before deploying them. While vendors often share performance metrics, these are rarely tested locally. Hence, Resaro standardised the testing process, assessing the vendor's model against AI Verify to ensure that it met the client's operational requirements.

As part of the testing process, Resaro obtained the AI model’s output probabilities via an API and assessed them against AI Verify’s robustness and fairness tests. Resaro also used the AI Verify testing framework and process checklist as part of its evaluation.
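To illustrate what a fairness check on output probabilities can look like, here is a minimal sketch in plain Python. It is not AI Verify's implementation or API: the function names, the toy probabilities, and the group labels "A" and "B" are all hypothetical, and accuracy gap is only one of many possible fairness measures.

```python
from collections import defaultdict

def predicted_labels(probabilities):
    """Turn each row of per-class probabilities into the index of the top class."""
    return [max(range(len(row)), key=row.__getitem__) for row in probabilities]

def accuracy_by_group(probabilities, true_labels, groups):
    """Compute accuracy separately for each subgroup, using only model outputs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    preds = predicted_labels(probabilities)
    for pred, truth, group in zip(preds, true_labels, groups):
        total[group] += 1
        correct[group] += int(pred == truth)
    return {group: correct[group] / total[group] for group in total}

def accuracy_gap(per_group):
    """Difference between the best and worst subgroup accuracy."""
    return max(per_group.values()) - min(per_group.values())

# Hypothetical data: probabilities as they might be returned by a vendor API.
probs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.3, 0.7]]
labels = [0, 1, 1, 1]
groups = ["A", "A", "B", "B"]  # assumed demographic attribute per image

per_group = accuracy_by_group(probs, labels, groups)
print(per_group, accuracy_gap(per_group))
```

Because the check needs only probabilities, ground-truth labels, and a group attribute, it can be run without access to the model's internals.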

Going beyond use to enhancing the toolkit

Through its experience in testing its clients’ AI models, Resaro found that it may not be necessary to run all the tests in the AI Verify toolkit. For example, robustness tests can be run in a black-box manner, whereas explainability tests require information from the client or its vendor. To address the need to run only specific tests, Resaro worked with IMDA and the Foundation to simplify the architecture of the AI Verify toolkit so that tests can be run as standalone modules to better suit the testing scenario.

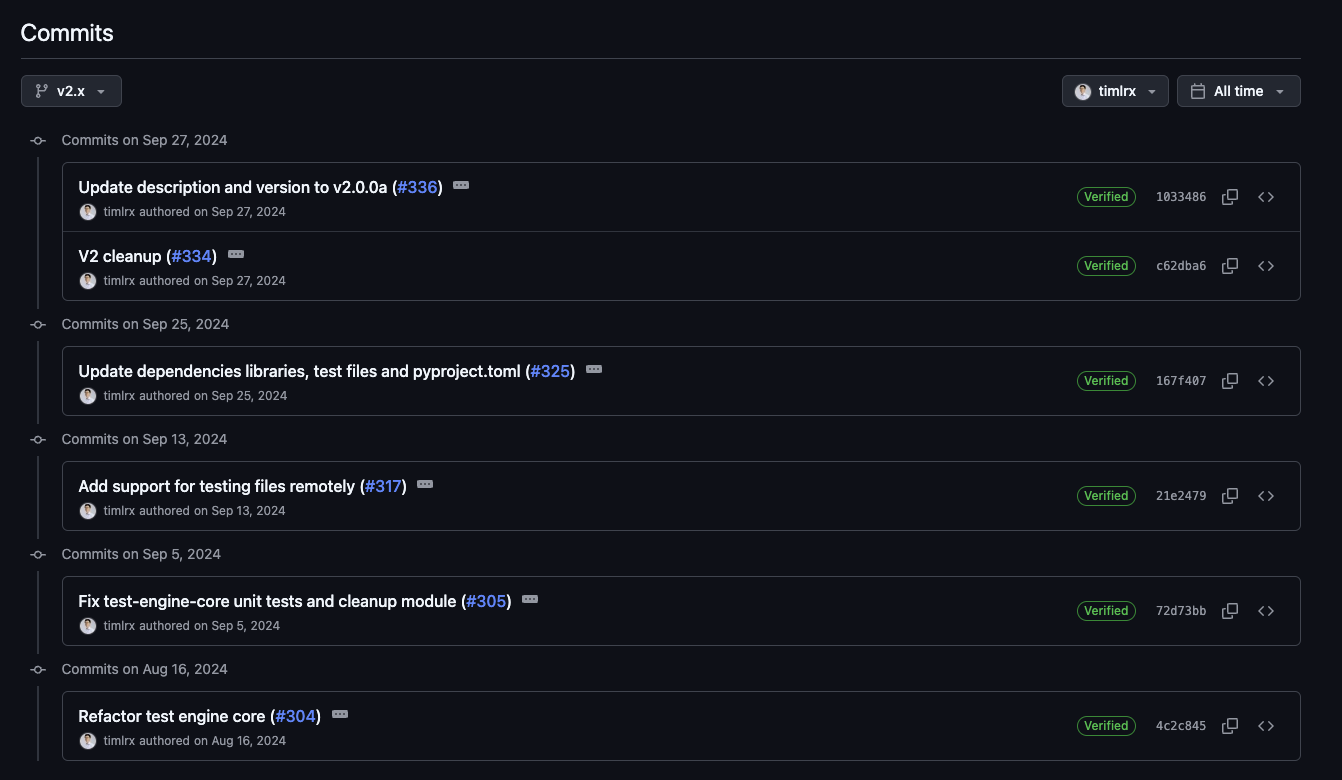
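The idea of a black-box robustness test can be sketched as follows. This is an illustrative example under stated assumptions, not the AI Verify robustness plugin: `stub_predict` is a hypothetical stand-in for a vendor's prediction API, images are simplified to flat lists of pixel intensities, and a brightness shift is just one possible perturbation.

```python
def accuracy(predict, images, labels):
    """Fraction of images for which the black-box predictor returns the true label."""
    hits = sum(int(predict(image) == label) for image, label in zip(images, labels))
    return hits / len(labels)

def shift_brightness(image, delta):
    """Add delta to every pixel, clamped to the 0-255 range."""
    return [min(255, max(0, pixel + delta)) for pixel in image]

def robustness_drop(predict, images, labels, perturb):
    """Compare accuracy on clean inputs with accuracy on perturbed inputs."""
    clean = accuracy(predict, images, labels)
    perturbed = accuracy(predict, [perturb(image) for image in images], labels)
    return clean, perturbed, clean - perturbed

def stub_predict(image):
    """Hypothetical model: class 1 if the mean intensity exceeds 127, else class 0."""
    return int(sum(image) / len(image) > 127)

# Toy dataset of four 3-pixel "images" with their true classes.
images = [[10, 20, 30], [200, 210, 220], [120, 125, 130], [140, 150, 160]]
labels = [0, 1, 0, 1]

clean_acc, perturbed_acc, drop = robustness_drop(
    stub_predict, images, labels, lambda image: shift_brightness(image, 20)
)
print(clean_acc, perturbed_acc, drop)
```

The test only ever calls `predict`, which is why this kind of check can run as a standalone module without model weights or training details.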

Resaro also worked with IMDA and the Foundation to improve the AI Verify toolkit's code and develop new plugins. These changes lower the barrier for anyone to install and run the tests, which are available for direct installation from PyPI as part of AI Verify 2.0.

Besides the simplified toolkit architecture, support for common computer vision frameworks such as PyTorch and TensorFlow was added, along with the ability to upload testing artefacts such as images. These additions streamline the installation, use, and maintenance of test modules for developers, ensuring that the toolkit remains relevant to industry needs.

The process of contributing to AI Verify has been a simple and seamless one. After aligning on the desired outcomes with the team maintaining the open-source code base, Resaro implemented the new modules and technical tests. These implementations were backed by comprehensive testing and documentation to ensure they met the required standards. Following this, Resaro submitted a pull request, and its changes were accepted and merged into the main AI Verify repository on GitHub after a review by the maintainers.

Key learnings on AI testing to share with the community

For companies that want to deploy AI, it is useful to have a pre-deployment testing plan from the outset of the project, outlining the areas to be covered and the requirements the AI solution must meet. The AI Verify framework, along with other relevant industry standards and guidelines, offers a good starting point for business owners to consider the various factors that need to be addressed.

As a third-party assurance provider, the challenge often lies in standardising and normalising performance metrics across different vendors. Conducting an independent test on an organisation’s own dataset is an effective way to gain a true understanding of the trade-offs between solutions, beyond the performance metrics stated by the vendor.
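One way to put vendors on a common footing is to compute the same metrics for each of them on the same labelled dataset. The sketch below is a hypothetical illustration: the vendor names, predictions, and labels are invented, and precision and recall are chosen only as familiar examples of metrics that expose trade-offs.

```python
def precision_recall(predictions, labels, positive=1):
    """Precision and recall for one class, computed from predictions and true labels."""
    pairs = list(zip(predictions, labels))
    tp = sum(1 for p, y in pairs if p == positive and y == positive)
    fp = sum(1 for p, y in pairs if p == positive and y != positive)
    fn = sum(1 for p, y in pairs if p != positive and y == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# The organisation's own labelled test set (hypothetical).
labels = [1, 1, 1, 0, 0, 0]

# Predictions from two hypothetical vendors on that same test set.
vendor_predictions = {
    "vendor_a": [1, 1, 0, 0, 0, 0],
    "vendor_b": [1, 1, 1, 1, 1, 0],
}

comparison = {
    name: precision_recall(preds, labels)
    for name, preds in vendor_predictions.items()
}
for name, (precision, recall) in comparison.items():
    print(f"{name}: precision={precision:.2f} recall={recall:.2f}")
```

In this toy case, one vendor is more precise while the other recalls more positives, a trade-off that a single headline accuracy figure reported by each vendor would not reveal.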

Demonstrating trustworthy AI is essential, and it helps organisations stay competitive and compliant in an increasingly AI-driven world. Resaro is actively contributing to open-source developments in this space and encourages others to join this vital effort. By fostering collaboration, we can build and attest to AI solutions that not only enhance performance but also uphold ethical standards and public trust. Let’s work together to shape a future where AI benefits everyone!
